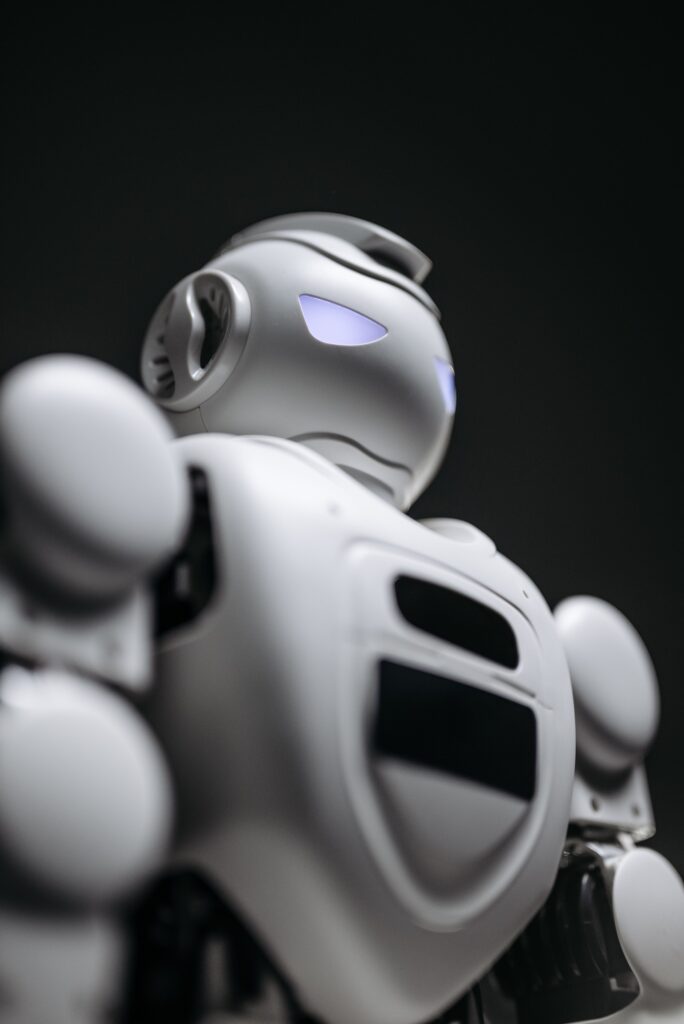

AI models that lie, cheat and plot murder: how dangerous are LLMs really?

October 13, 2025

(Nature) – Tests of large language models reveal that they can behave in deceptive and potentially harmful ways. What does this mean for the future?

Are AIs capable of murder?

That’s a question some artificial intelligence (AI) experts have been considering in the wake of a report published in June by the AI company Anthropic. In tests of 16 large language models (LLMs) — the brains behind chatbots — a team of researchers found that some of the most popular of these AIs issued apparently homicidal instructions in a virtual scenario. The AIs took steps that would lead to the death of a fictional executive who had planned to replace them.

That’s just one example of apparent bad behaviour by LLMs. In several other studies and anecdotal examples, AIs have seemed to ‘scheme’ against their developers and users, secretly and strategically misbehaving for their own benefit.